In order to analyze the natural frequencies of a given part, a 3D model with realistic material parameters can be used.

As the eigenfrequencies and eigenmodes are solutions to the generalized eigenvalue-problem $$A\eta = (2\pi f)^2 M\eta$$ we can approximate the solutions on a finite element space using the finite element method (FEM or FEA).

Although there are multiple different ways to solve this equation, we will use iterative methods to minimize the rayleigh-quotient. This makes sense as we are interested in the smallest eigenvalues and both \(A\) and \(M\) can be very large matrices (making an LU-factorization infeasible).

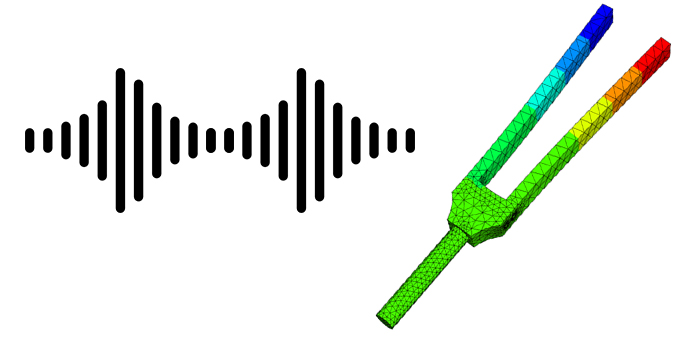

As an example, we will analyze the eigenfrequencies and eigenmodes of a tuning fork. If you are interested in more details you can watch our talk below or download the corresponding pdf.

As mentioned above, we will try to approximate solutions to the generalized eigenvalue problem $$A\eta = (2\pi f)^2 M\eta$$ within a finite element space.

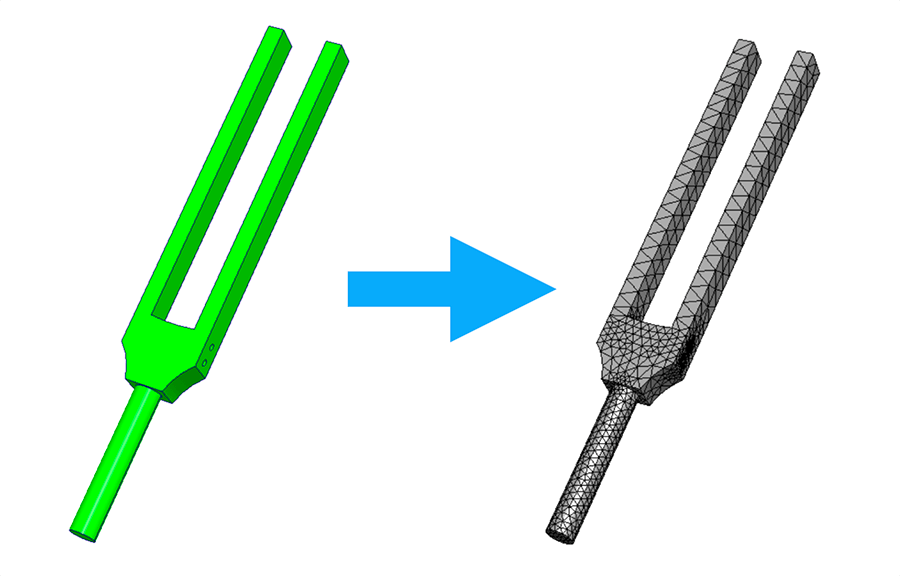

We will therefore start by constructing a mesh for our geometry.

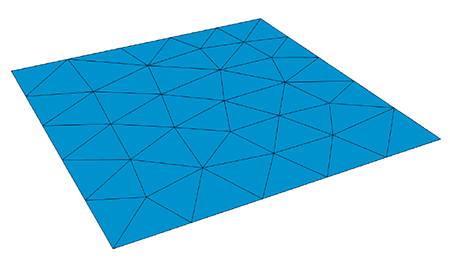

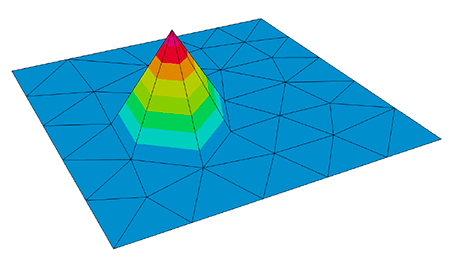

If we now use one hat function \(\phi_i\) per mesh vertex \(x_i\) such that $$ \phi_i(x_j)=\delta_{ij} $$ as basis for a space \(V_h\subset H^1_0\), we can represent each element of \(V_h\) as a linear combination of basis functions. This also applies to the approximation $$\eta^{(h)}=\sum_{j=1}^n \eta_j\phi_j$$ of \(\eta\) within \(V_h\).

Note: In practice, we will use the hat functions mentioned above in combination with higher-order basis functions to get a better approximation.

As our tuning fork is made out of metal, which deforms linearly (up to a point) we can use the model of linear elasticity to define the bilinear form \(a\) with Lamé parameters \(\mu\) and \(\lambda\) as $$ a:=\int_{\Omega} 2\mu\varepsilon(u):\varepsilon(v)+\lambda \operatorname{div}(u)\operatorname{div}(v)dH^n $$ where \(\varepsilon(u):= \frac{1}{2}\left(\nabla u +\nabla u^T\right)\) and \(C:D:=\sum_{ij}C_{ij}D_{ij}=tr(C:D^T)\). And the bilinear form \(m\) as $$ m:=\int_{\Omega}\rho uvdH^n. $$ As both \(a\) and \(m\) are bilinear we can define the stiffness-matrix \(A\) and mass-matrix \(M\) using the basis-functions \(\phi\) as $$ \displaylines{(A_{ij}):=a(\phi_i,\phi_j)\\ (M_{ij}):=m(\phi_i,\phi_j)}. $$ Note that both \(A\) and \(M\) are material dependent.

Now that we have constructed a mesh, a finite element space as well as the stiffness-matrix \(A\) and mass-matrix \(M\) we can use the LOPCG method (A. Knyazev 2000) to approximate the smallest eigenvalue and corresponding mode shape by minimizing the rayleigh-quotient. You can see the LOPCG algorithm below:

For this example, we have implemented the LOBPCG method (block version of LOPCG) using python/NGSolve. You can download the code in the download section below.

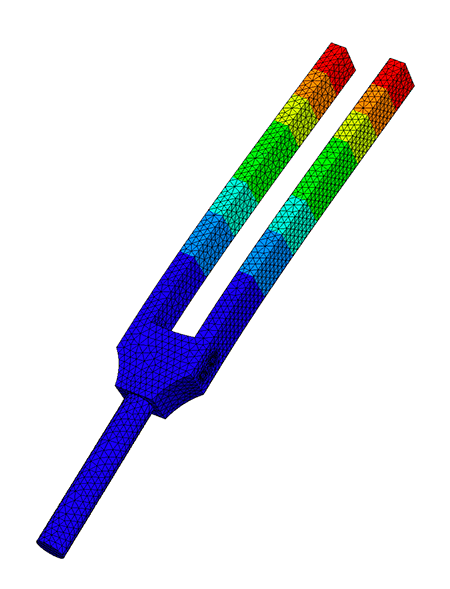

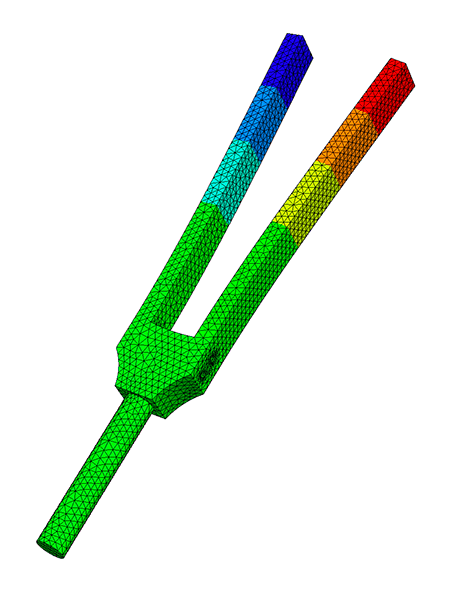

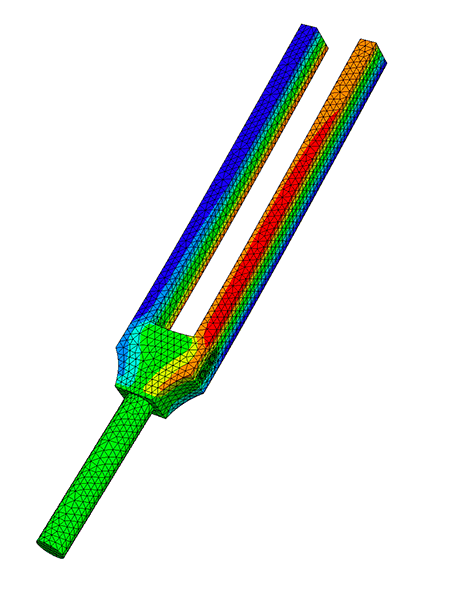

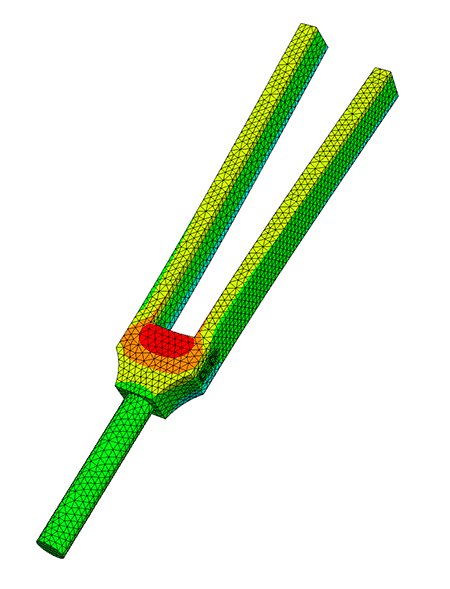

Here we have used Dirichlet boundary conditions on the handle of the tuning fork. You can see the resulting frequencies and mode shapes below:

Note: To make the talk more YouTube friendly we have simplified several aspects and we have omitted the somewhat technical proof for the rate of convergence of PINVIT (A. V. Knyazev and Neymeyr 2003).

Hello, today we want to take a look at how we can calculate eigen frequencies of a part that is given to us as a 3d model using the finite element methods. First, we will quickly go over how we can measure the natural frequency of a physical object and why we might want to calculate it instead. Then, we will go over the main idea of the finite element method and the model of linear elasticity. Before we take a close look at both the LOPSD and the LOPCG method, which we will use to actually calculate the frequencies. As they both use preconditioners, we will also take a look at what these are. At the end we will do a quick demo calculating the natural frequency of a tuning fork using NGSolve. If we have a physical copy of the object we want to analyze, we can measure the natural frequency by exciting it using in this case, a microphone and an oscilloscope. Here the part we are analyzing is a 440 hertz tuning fork. So we are expecting a 440 hertz sine wave and while a signal you see on screen right now might look like a pure sine wave. Using the Fourier transform, we see that it is actually composed of multiple different frequencies to be exact 440 hertz, and the integral multiples like 880 hertz. However, which frequencies are included in the signal is dependent on how we hit the tuning fork. So here you can see I managed to hit the tuning fork in a way that I excite 440 hertz frequency and a higher frequency, which is not a multiple of 440 hertz. But what if we can't easily measure the frequency of objects, or we don't have access to a physical copy? In this case, we want to analyze the Eigen frequencies using a 3d model. We can do this by solving the eigenvalue problem you see on screen now. A eta is equal to 2 pi f squared M eta using, in our case, stiffness matrix A and mass matrix M, which we'll get into more details later. First of all, we would like to mention where such eigenvalue problems might derive from. So if we consider the Laplace eigenvalue problem with Dirichlet boundary data zero, we can multiply the first equation with a bump function and integrate over the whole region omega, we can use the first greens identity to rewrite the integral and our boundary integral vanishes, because we multiplied with a bump function that vanishes on the boundary. So, in the end, we get the weak formulation that will be find a u in H_0^1 such that a(u,v) is equal to lambda m(u,v) for all v in H_0^1. Where small a and small m denote by linear forms that are given above. So if we would like to compute this numerically, it would be impossible because our H_0^1 is an infinite Hilbert space. So the idea is to create a mesh of our region and then try to compute a solution on this finite element space V_h, that is a subset of H_0^1 one and h denotes the finest of our grid. V_h is the span of basis functions that satisfy the following equation that phi_i(x_j) is equal to the Kronecker Delta. And x_j denotes the vertices of the mesh, or the picture on the left we see a mesh on the unit square. And on the right we see a basis function that satisfy the Kronecker delta such that it is one on a fixed vertex and zero everywhere else. So now we can create a linear combination through this basis functions on our finite element space. And we define elements of our matrix A as a(phi_i, phi_j). We can only compute this through the basis functions, because our bilinear forms are linear. And we do the same for the matrix M. If we plug this in, we get two sums and if we simplify this, we get the compact form Au equals lambda Mu. So we again get generalized eigenvalue problem. On the picture on the left, we see the Eigenfunction corresponding to the first Eigenvalue on the unit square. And on the right we see another Eigenfunction corresponding to another eigenvalue. So, coming back to the tuning fork, as we want to analyze metal parts which deform linearly, we'll use the model of linear elasticity to define the matrix A, which depends on the lamay parameters mu and lambda. And our mass matrix depends on the density rho of the material. So both A and M are material dependent. So, if we recreate a 3d model of our tuning fork, we can create a mesh on it, and then calculate a solution our tuning fork will swing. So in the following, we will present a few methods to compute this eigenvalue problem. Now, we want to look at a generalized symmetric eigenvalue problem, which has the form Au equals lambda Mu. Normally, we can solve this kind of problem by applying the shift and inverse iteration method. Therefore, we have to compute our LU factorization for the inverse, which has a complexity of n to the third power. As we use very fine meshes, our matrices A and M have dimensions of about a million times a million, so, we apply a preconditioner to lower the condition number for this problem. According to Neymeyr, the preconditioner has to approximate the inverse of A to a certain degree as stated in this inequality. For the local optimal precondition steepest descent method, we want to minimize the Rayleigh quotient for A and M being symmetric and positive definite matrices. Where the rayleigh quotient is defined as the scalar product of u, Au over u,Mu, we know that its minimum is equal to the smallest eigenvalue lambda one. So our goal is to generate a sequence of nonzero vectors by using the gradient of the really quotient. Now we want to compute the gradient of the Rayleigh quotient. Therefore, we use the symmetry of A, so A is equal to A transpose and the derivative of x transpose times A times x is equal to two times X transpose times a using both of the mentioned equalities and the quotient rule, we end up with the gradient of the Rayleigh quotient is equal to 2 over U transpose Mu times u minus lambda u Mu. As we want to get a precondition iteration form because for A being poorly conditioned to problem itself is poorly condition, we use a T based scalar product according to Neymeyr and can Knyazef we obtain that the gradient with respect to T inverse of the Rayleigh quotient is equal to two over U^T Mu times T inverse times Au minus lambda u Mu. As we know that the gradient is the vector with the steepest descent we can formulate our iteration as u_{i+1} is equal to u_i minus alpha_i omega_i with omega_i being the gradient of the Rayleigh quotient multiplied by a scalar. So, as our goal is a fast convergence rate, we have to find a good choice for alpha. Possibly choice is alpha being equal to one, this is the so-called precondition inverse iteration or PINVIT. For better convergence, we choose alpha according to the Rayleigh-Ritz method. Therefore, we minimize the Rayleigh quotient on the subspace span(u_i, omega_i). So we end up with a two times to generalize the eigenvalue problem of the form ay equals lambda my with two matrices a and m as we can see here, the solutions of this problem are the roots of the quadratic characteristic equation. Now, we can rewrite the iteration is u_i plus one is equal to xi_i u_i plus delta_i omega_i, which are the components of the Eigenvector y to the smallest Eigenvalue. Implementing an algorithm for a LOPSD method can be done as shown here. We first have to define a stopping criterion for our algorithm. Therefore, it is convenient to choose the T inverse norm of the residuum r_i as r_i times T inverse times r_i is equal to r_i times omega_i. And we just have to calculate the scalar product of these two vectors, we start with an arbitrary vector u_0, and the corresponding lambda_0. The most important part of the algorithm is the minimization part of the Rayleigh quotient for the pencil A minus lambda M, and the subspace span(u_i, omega_i). By applying the Rayleigh-Ritz method, we obtained the Ritzvalue and Ritzvector. And with them, we can calculate our next iterate u_{i +1}. After this procedure is done, we end up with an approximation to the smallest Eigenvalue and the corresponding Eigenvector. This algorithm can be implemented with complexity O(n) since the matrix-vector multiplication in line three is cheap, because we have sparse matrices. Furthermore, the Rayleigh-Ritz method has the same complexity. By using NGSolve objects, we can implement the previously mentioned algorithm in a compact way as shown here. In this case, we calculated the first 20 iterates for a fixed number of ascending Eigenvalues starting with the smallest. Unfortunately, the LOPSD method described by Christoph converges rather slowly, and you can see why in this picture. So the LOPSD method is represented by the green line. And as you can see, it goes in this zig-zag pattern, what we would much rather do is represented by the red line. And this is to go in each direction just once and avoid the zig-zag pattern. And we already know this from a linear system of equations as the CG method. So what you would like to do is use the CG method to minimize the Rayleigh quotient. And therefore, we need search directions where consecutive search directions are A-orthogonal, which means that A scalar product is zero. And here the difference to a linear system of equations will be that the functional is not quadratic, which means we are losing the finite termination property. But this is not a problem. In this case, as we are talking about very large matrices. And we are not interested in doing a million iterations anyways. So this is not really a problem. We start similar to the LOPSD method by defining r_i as the residual after step i. And this is equal to the gradient of the relay quotient minus a scaling factor. But in this case, we're only interested in the direction. So we are not interested in the length of this vector. And as you saw in the picture, the first search direction is equal to the gradient. But afterwards, we use the search directions p_i, which are according to a paper from Feng and Owen, A-orthogonal for the generalized eigenvalue problem. And so what we can do now is assuming that the current approximation is u_i, we can get a better approximation u_{i+1} in a way similar to the LOPSD method by choosing xi_i and delta_i, such that u_{i+1} minimizes the Rayleigh quotient. But now we are using the search direction p_i, which we have defined as omega_i, which is the preconditioned residual plus beta_i times p_{i-1}. And so we can see that because p_i is defined a sum, there might be an improvement if we optimize over all three parameters at once, and not just over xi and delta. And so we ended up with the form for u_{i+1} as xi_i times u_i minus delta_i omega_i plus gamma_i p_{i-1}. And so by optimizing all three parameters at once, this method is locally optimal. And here you can see the corresponding algorithm. And one important change is here we have an index shift for p. So before we use p_i and p_{i-1}, but it is much easier to implement if you use p_i and p_{i+1} instead. So, we will initialize p_0 as zero and p_1 as the preconditioned residual. Whereas before we started with p_0 equal to the preconditioned residual. Now we can start by calculating the residual r, which is equal to Au minus lambda and Mu. Next we can calculate a preconditioned residual. And then we check if our approximation has reached a given exactness tau using the preconditioner norm. Now, the only difference to the LOPSD method is that we are minimizing over the three dimensional trial subspace with basis vectors omega_i and u_i. And now the new vector p_i, which is the new search direction, then we can update our guess, lambda_{i+1} as the smallest to its value, and u_{i+1} as the corresponding Ritz-vector. And as Christoph described earlier, we multiply the Ritz-vector corresponding to lambda_{i+1} with the basis vectors, omega_i u_i ans p_i. And at the end, we calculate the new search direction p_{i+1}. And we end up with an approximation of the smallest eigenvalue and corresponding eigenvector. As for complexity, because the only change we did was to increase the number of basis vectors of our trial subspace, we need to take a look at the complexity of the Rayleigh-Ritz method. But as Christoph told us, this is O(n). And so the overall complexity of the LOPCG method does not change compared to the LOPSD method, it says O(n) for sparse matrices. And you can see this behavior on this plot. This is a log-log plot. Here we have calculated the smallest Eigenvalue and the corresponding Eigenvector for the Laplacian on the unit square using a different fineness of mesh. And you can see on the x axis we have the degrees of freedom. And on the y axis, we have the time per iteration. And for reference, the gray line represents linear complexity, O(n). And as you can see the two dashed lines which represent a polynomial fitted to the actual data are almost parallel, and they are also parallel to the reference, O(n). And one thing you'll probably notice is that the extra data represented by the triangles on the left hand side of the screen is not very close to the polynomial fitted for the LOPCG method. And this is because for a coarse mesh, theLOPCG method converges so fast that a small change in the overall time as a big effect on the time per iteration. As for convergence, the LOPCG method converges at least as fast as the LOPSD method as the trial subspace used in the LOPSD method is included in one use in the LOPCG method. Unfortunately, there's not yet an adequate theory of convergence known. And here you can see a comparison of the rate of convergence of the LOPCG and LOPSD method using two different preconditioners. So on the left hand side, we have the local preconditioner, which is not very good, but mon preconditioners later, and we can see that for a bad preconditioner. The LOPCG method converges much faster than the LOPSD method, whereas using a good preconditioner like multigrid the LOPCG method converges faster, but not as much. Overall, the LOPCG method seems to be much less dependent on a very good preconditioner. So the LOPCG method converges fast for both a good preconditioner and a bad preconditioner. If we now want to compute more than one Eigenvalue, let's say that m smallest Eigenvalues, we can use the m smallest Ritz-values as an approximation. So for example, if we want the third smallest Eigenvalue, we would use the third smallest Ritz-value. And we will also increase the number of basis vectors of our trial subspace. So, in this example, we would use omega_1 through omega_m u_1 through u_m and p_1 through p_m In order to approximate the m smallest Eigenvalues. So how do we choose the block size m? Well, of course, we include the Eigenvalues of interest. But we also want to choose m such that there is a large gap between the Eigenvalue lambda_m and lambda_{m+1}, because according to Knyazev, this leads to faster convergence. However, as we also have to increase the number of basis vectors of our trial subspace, and the Rayleigh-Ritz method scales with complexity O(Nm^2), we can't make the block size infinitely big. You can see this behavior in this plot. Here we are calculating the m smallest Eigenvalues of the Laplacian on the unit square. on the x axis you have the number of Eigenvectors we are calculating and on the y axis, there is the time per iteration. And here we are actually achieving complexity O(n), which is probably due to the implementation in NGSolve. One further improvement we can do is as not all Eigenvectors and Eigenvalues converge at the same rate, we can try to use an index set J to keep track of which Eigenvectors have already which a given exactness. And this way we can ignore Eigenvectors that have already converged adequately. So, what we can do is we can use the subspace with basis vectors omega_j, and these are the precondition residuals of Eigenvectors that have not yet adequately converged together with u_1 trough u_m, and p_1 through p_m. And this way, we don't have to calculate the residual and preconditioned residual. For Eigenvectors that have already converged good enough. Using this method locked or ignored, Eigenvectors can still change. So this method is called soft locking. We've already heard in the section of the LOPSD that the preconditioner is used to accelerate the convergence of our iteration. If we choose a pre conditioner T, T inverse should approximate A inverse as much as possible. In other words, T inverse times A should be near the identity matrix. Preconditioners for linear system are studied very well and the same preconditioners useful in linear systems can also be used for Eigenvalue problems. So in the next few steps, we'll just consider linear systems. If we start from a system Ax=b, we can rewrite this equation to an iterative form x_{t+1} is equal to I minus T inverse A x_t plus T inverse b. And this iteration converges if the iteration matrix I minus T inverse A has a norm less than one. And the Banach fixpoint theorem assures us that convergence. So moving on, we'll discuss three different preconditioners the most simple one is just the Jacobi preconditioner. So if we take the matrix A and we'll split it up in a lower diagonal and upper matrix, we just take the diagonal elements of the matrix as a preconditioner, so T inverse is pretty easily computable. And again, this converges if this iteration matrix has a norm less than one, the Jacobi preconditioner is pretty good, if the matrix A is diagonal dominant, but most of the times that is not the case and this iteration converges pretty slow and hence not recommendable. Since we are considering symmetric and positive definite matrices, it is recommended to use a Cholesky decomposition over a LU decomposition. So if we want a preconditioner with a Cholesky decomposition, we tried to create an almost Cholesky composition of the matrix A. In other words, we want A is equal to L times L star plus R where R denotes the error matrix in praxis with don't execute the error matrix, we just compute L times L star, that we choose that as a preconditioner. A possible way to compute this incomplete Cholesky decomposition would be to only consider non zero entries of the matrix A. And if we compute the decomposition, the zero elements of the matrix A also becomes zero elements of our factorized matrix L. Since we're considering sparse and very large matrices, this incomplete decomposition might not be a proper approximation to our inverse matrix A, such that if we want a better approximation, we use fill ins. In other words, zero elements of the matrix can become nonzero after the decomposition. If we plug in our preconditioner T, into the iteration, we get the following iteration again. And this again converges if the iteration matrix has a norm less than one. multigrade is a pretty complex idea, but often use over the Jacobi and direct In other words, the incomplete Cholesky decomposition. The idea is to accelerate the convergence of the iteration by using coarser grids. So the basic idea is to reduce high-frequency errors by solving a coarse problem, we're not going too much in detail, but we'll give a brief idea. So starting from iteration point, x_td, and h denotes on which grid we are in so we starting from the fine grid. So first of all, we apply a presmoother that could be a Jacobi or a Gauss-Seidel preconditioner for only three to five steps. After that, we compute the residual and restrict that to a coarser grid. In other words, we project that to a coarser grid. And on this coarse grid, we compute the error of a e that he is the error, a e equals the residual. After we've computed the error, we interpolate it back to the fine grid, and we add it to our x_t, again, on the fine grid. And after that, we apply a postsmoother again, for three to five steps and we get the next iteration step. And we compute this iteration until we get the convergence. In other words, until the error gets to zero. In the end, we would like to compare these three preconditioners. So based on our initial Laplace eigenvalue problem that we discussed in the introduction, so we compare the multigrid, the direct the direct would be the incomplete Cholesky decomposition at the local would be the Jacobi preconditioner. We see that the Jacobi preconditioner converges pretty slow, because our matrix A is not diagonal dominant, and hence, it doesn't approximate the inverse matrix pretty well, that we see that multigrade and direct are the preferred preconditioners. Okay, so now that we have order theory out of the way, we can actually simulate our tuning fork from the beginning and you can see the code right now. So, here we have created a geometry class and Eigenvalue solver class. So we start loading the geometry as a step file, and then we can easily generate a mesh and here we are using the multigrid preconditioner. And then we use the LOBPCG method to calculate the 10 smallest Eigenvalues. And if we take a look at the output of this program, we can see the 10 smallest Eigenvalues and we can also visualize the Eigenfunctions. So here we have, maybe I'll make this window a bit bigger. So here we have a 3d model we are analyzing. And we can also take a look at the mesh and if we now take a look at the solution. Let me first of all activate deformation and turn on color. So, here we can see the Eigenvector corresponding to the smallest eigenvalue, which is 414 hertz, but, as we saw in the beginning the Eigenfrequency we are interested in is 440 hertz. So, this is the second smallest eigenvalue. So, down here we can change which Eigenfunction we want to visualize, so, and we can see that here both prongs swing mirror-inverted, which is what we expected. And we can also take a look at other Eigenfunctions like this one for example, here both prongs swing similar to the 440 hertz Eigenfrequency but with a higher frequency and like this

We compare different iterative methods (including LOBPCG (A. Knyazev et al. 2007)) for computing eigenfrequencies and the corresponding eigenmodes in a finite

element space. We analyze both numerical complexity and convergence of each

algorithm and provide reference implementations using NGSolve.

The most suitable method is then used to analyze a clamped-free beam and a

tuning fork with realistic material properties.

We compare our results to the Euler-Bernoulli beam-theory and measurements

done on a real world model.

The content published on this page (with exceptions) is published under the CC Attribution-NonCommercial 3.0 Unported License. You are free to share on commercial blogs/websites as long as you link to this page and do not sell material found on blog.hirnschall.net/* or products with said material.